06.10

Part 1 of this article talked about how I found the bytecode files and the interpreter assembly code. Let's go further and try to understand how these bytecode files are structured.

We now have 188MB of JSON describing the CPU state when an instruction tries to access either the bytecode file in memory or the interpreter state. However, it is not readable at all:

{'type':'r', 'size':4, 'addr':'016E1964', 'val': '00007D40', 'pc':'80091DB8'

{'type':'r', 'size':4, 'addr':'016E00F4', 'val': '11000000', 'pc':'80091DBC'

{'type':'w', 'size':4, 'addr':'016E1964', 'val': '00007D44', 'pc':'80091DC8'

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80092720'

{'type':'r', 'size':4, 'addr':'016E053C', 'val': '816D83B4', 'pc':'80092724'

{'type':'r', 'size':4, 'addr':'016E1964', 'val': '00007D44', 'pc':'80092730'

{'type':'r', 'size':4, 'addr':'016E00F8', 'val': '00000000', 'pc':'80092734'

{'type':'w', 'size':4, 'addr':'016E1964', 'val': '00007D48', 'pc':'8009273C'

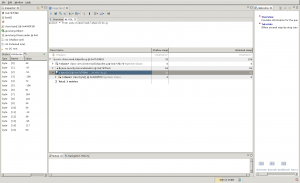

We need to enhance this by using the information we already have about the interpreter's behavior (where PC is stored, where the instruction dispatcher is, etc.). I wrote a very simple script called filter.py, which iterates over each line of the dump, loads it as a Python dict, applies a list of filters to it and prints the filtered line. Here is an example of a filter which detects dump lines coming from the instruction dispatcher:

```python
(lambda d: d['pc'] == '80091DBC',
 'ReadInstr: %(val)s at pc=%(r3)s (@ %(pc)s)',
 'blue'),
```

It's a tuple of a predicate function, a format string that is filled in from the dict itself, and a color that is translated to the equivalent ANSI color code (for blue, \e[34m). We can also write filters for instructions manipulating the Program Counter (PC) and for instructions accessing the script bytecode:

```python
(lambda d: (int(d['addr'], 16) - 0x016E1964) % 5152 == 0
           and int(d['addr'], 16) >= 0x016E195C
           and d['type'] == 'r',
 ' GetPC: %(val)s at addr=%(addr)s (@ %(pc)s)',
 'green'),
(lambda d: (int(d['addr'], 16) - 0x016E1964) % 5152 == 0
           and int(d['addr'], 16) >= 0x016E195C
           and d['type'] == 'w',
 ' SetPC: %(val)s at addr=%(addr)s (@ %(pc)s)',
 'red'),
(lambda d: 0 <= int(d['off'], 16) < 0x8191,
 'SoAccess: type=%(type)s val=%(val)s at off=%(off)s (@ %(pc)s)',
 'yellow'),
```
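filter.py itself isn't shown here, so the following is only a minimal sketch of what such a script could look like. It assumes each dump line is a Python dict literal (single quotes, hence `ast.literal_eval` rather than `json.loads`), that the first matching filter wins, and that unmatched lines are printed unchanged, as they appear in the filtered excerpts further down. The names `FILTERS`, `parse_line`, `render` and `main` are hypothetical, and the example filter drops `%(r3)s` because the excerpt lines above carry no register values.

```python
import ast
import sys

# ANSI SGR foreground codes; "\x1b" is the ESC byte that shells write as "\e".
COLORS = {'red': 31, 'green': 32, 'yellow': 33, 'blue': 34, 'cyan': 36}

# Hypothetical filter list using the same (predicate, format, color) shape.
FILTERS = [
    (lambda d: d['pc'] == '80091DBC',
     'ReadInstr: %(val)s (@ %(pc)s)',
     'blue'),
]


def parse_line(line):
    """Parse one dump line into a dict, tolerating a missing closing brace."""
    line = line.strip()
    if not line.endswith('}'):
        line += '}'
    return ast.literal_eval(line)


def render(line, filters=FILTERS):
    """Return the colored output of the first matching filter, else the raw line."""
    d = parse_line(line)
    for predicate, fmt, color in filters:
        if predicate(d):
            return '\x1b[%dm%s\x1b[0m' % (COLORS[color], fmt % d)
    return line.rstrip('\n')


def main(path):
    with open(path) as dump:
        for line in dump:
            if line.strip():
                print(render(line))


if __name__ == '__main__' and len(sys.argv) > 1:
    main(sys.argv[1])
```

The SoAccess filter reads an `off` key that the raw lines don't contain, so the real script presumably computes it (for instance as an offset from the bytecode's load address) before running the predicates.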

Now that we know where the PC is stored, a first step would be to locate the control flow instructions. I took all of the ReadInstr lines from the dump and analyzed the PC values to see which instructions were doing jumps, i.e. after which instructions the PC ends up at least 0x10 above or below its previous value. I won't paste the script here (it's easy to code but a bit long), but it was of great use, finding only four instructions that modify the control flow: opcodes starting with 05, 06, 08 and 09 each modified the value of PC at least once in the dump. Looking at the dump, 05 and 06 seem to do a lot of work, storing to and loading from addresses we don't know about yet, but by comparing the PC before and after those opcodes, we can determine easily enough that they are the usual CALL/RET instructions. For example:

Opcode 05 at 7D48, jumping to 742C

Opcode 05 at 7434, jumping to 6FA0

Opcode 05 at 6FB8, jumping to 6D8C

Opcode 06 at 6D88, jumping to 6FC0

Opcode 06 at 6F9C, jumping to 743C
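The jump-detection script isn't shown, but the idea fits in a few lines. The sketch below (all function names hypothetical) pulls (pc, word) pairs out of the ReadInstr lines, flags every instruction after which the next fetched PC is at least 0x10 away, and prints results in the same shape as the listing above. It assumes the opcode is the top byte of the instruction word, as in 08000000.

```python
import re

# Matches filtered lines such as "ReadInstr: 08000000 at pc=00006E24 (@ 80091DBC)".
READ_INSTR = re.compile(r'ReadInstr: ([0-9A-F]{8}) at pc=([0-9A-F]{8})')


def parse_fetches(lines):
    """Extract (pc, instruction word) pairs from ReadInstr lines, in dump order."""
    fetches = []
    for line in lines:
        m = READ_INSTR.search(line)
        if m:
            fetches.append((int(m.group(2), 16), int(m.group(1), 16)))
    return fetches


def find_jumps(fetches, threshold=0x10):
    """Flag instructions after which the next fetched PC moved by >= threshold."""
    jumps = []
    for (pc, word), (next_pc, _) in zip(fetches, fetches[1:]):
        if abs(next_pc - pc) >= threshold:
            jumps.append((word >> 24, pc, next_pc))  # opcode = top byte of the word
    return jumps


def describe(jump):
    """Format a jump like the listing above."""
    opcode, pc, target = jump
    return 'Opcode %02X at %04X, jumping to %04X' % (opcode, pc, target)
```

Collecting `sorted({op for op, _, _ in find_jumps(fetches)})` over the whole dump should then give a short list of candidate control flow opcodes. The sketch treats all fetches as coming from a single interpreter context; the dump also contains fetches from another context (the first opcode 11 trace further down stores its PC at 016E2D84), which would likely show up as spurious jumps unless fetches are split per context first.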

See how the addresses opcode 06 jumps to are always right after an opcode 05 (8 bytes past it, presumably the opcode word plus a 4-byte target)? Looking at the dump a bit more closely, we can also see that op05 writes the PC of the instruction that follows it somewhere in the interpreter state (let's call this somewhere "the stack" from now on), and op06 reads it back from exactly the same place! Let's look at opcode 08 now in the memory accesses dump:

ReadInstr: 08000000 at pc=00006E24 (@ 80091DBC)

SetPC: 00006E28 at addr=016E1964 (@ 80091DC8)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'800923EC'

{'type':'r', 'size':4, 'addr':'016E053C', 'val': '816D83B4', 'pc':'800923F0'

GetPC: 00006E28 at addr=016E1964 (@ 800923FC)

SoAccess: type=r val=00006D80 at off=00006E48 (@ 80092400)

SetPC: 00006E2C at addr=016E1964 (@ 80092408)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'8009240C'

SetPC: 00006D80 at addr=016E1964 (@ 80092418)

So... it reads opcode 08, adds 4 to the PC, reads a word from the bytecode, adds 4 to the PC again, and finally sets the PC to the word it just read. In other words, after executing 08000000 12345678, PC=12345678. That's an absolute jump, and it seems unconditional: every time an opcode 08 is encountered in the dump, PC is modified. Since loops and if statements need conditional jumps, and 09 is the only control flow opcode left, opcode 09 is most likely a conditional jump. Two parts of the dump related to opcode 09 seem to confirm that:

ReadInstr: 09000000 at pc=00000500 (@ 80091DBC)

SetPC: 00000504 at addr=016E1964 (@ 80091DC8)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80092420'

{'type':'r', 'size':4, 'addr':'016E053C', 'val': '816D83B4', 'pc':'80092424'

GetPC: 00000504 at addr=016E1964 (@ 80092430)

SoAccess: type=r val=00000564 at off=00000524 (@ 80092434)

SetPC: 00000508 at addr=016E1964 (@ 8009243C)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80092440'

{'type':'r', 'size':4, 'addr':'016E115C', 'val': '00000000', 'pc':'8009244C'

{'type':'r', 'size':4, 'addr':'016E055C', 'val': '00000000', 'pc':'80092458'

SetPC: 00000564 at addr=016E1964 (@ 80092464)

{'type':'r', 'size':1, 'addr':'016E0558', 'val': '00000000', 'pc':'80091CFC'

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80091D38'

{'type':'r', 'size':4, 'addr':'016E1970', 'val': '00000011', 'pc':'80091D44'

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80091D78'

{'type':'r', 'size':4, 'addr':'016E1970', 'val': '00000011', 'pc':'80091D84'

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80091DA8'

{'type':'r', 'size':4, 'addr':'016E053C', 'val': '816D83B4', 'pc':'80091DAC'

In this case, we jumped to 0564 with the opcode 09000000 00000564. However, another part of the dump shows us that opcode 09 does not always jump:

ReadInstr: 09000000 at pc=000065A4 (@ 80091DBC)

SetPC: 000065A8 at addr=016E1964 (@ 80091DC8)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80092420'

{'type':'r', 'size':4, 'addr':'016E053C', 'val': '816D83B4', 'pc':'80092424'

GetPC: 000065A8 at addr=016E1964 (@ 80092430)

SoAccess: type=r val=000065EC at off=000065C8 (@ 80092434)

SetPC: 000065AC at addr=016E1964 (@ 8009243C)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80092440'

{'type':'r', 'size':4, 'addr':'016E115C', 'val': '00000000', 'pc':'8009244C'

{'type':'r', 'size':4, 'addr':'016E055C', 'val': '00000001', 'pc':'80092458'

{'type':'r', 'size':1, 'addr':'016E0558', 'val': '00000000', 'pc':'80091CFC'

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80091D38'

{'type':'r', 'size':4, 'addr':'016E1970', 'val': '00000011', 'pc':'80091D44'

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80091D78'

{'type':'r', 'size':4, 'addr':'016E1970', 'val': '00000011', 'pc':'80091D84'

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80091DA8'

{'type':'r', 'size':4, 'addr':'016E053C', 'val': '816D83B4', 'pc':'80091DAC'

See the missing SetPC? The target 000065EC is read, but PC is never set to it and stays at 000065AC. We can now reasonably assume we know most of the behavior of the four control flow instructions: 05=CALL, 06=RET, 08=JUMP and 09=CJUMP. But we also know now that there is some kind of stack used by CALL/RET. Let's add some new filters to our script to easily spot instructions that manipulate this stack:

```python
# Stack address slot: 0x016E1160 in the first context, one context every 5152 bytes.
(lambda d: (int(d['addr'], 16) - 0x016E1160) % 5152 == 0
           and int(d['addr'], 16) >= (0x016E195C - 5152)
           and d['type'] == 'r',
 ' GetStackAddr: %(val)s at addr=%(addr)s (@ %(pc)s)',
 'green'),
(lambda d: (int(d['addr'], 16) - 0x016E1160) % 5152 == 0
           and int(d['addr'], 16) >= (0x016E195C - 5152)
           and d['type'] == 'w',
 ' SetStackAddr: %(val)s at addr=%(addr)s (@ %(pc)s)',
 'red'),
# Stack top: the word right after the PC slot.
(lambda d: (int(d['addr'], 16) - 0x016E1968) % 5152 == 0
           and int(d['addr'], 16) >= 0x016E195C
           and d['type'] == 'r',
 ' GetStackTop: %(val)s at addr=%(addr)s (@ %(pc)s)',
 'green'),
(lambda d: (int(d['addr'], 16) - 0x016E1968) % 5152 == 0
           and int(d['addr'], 16) >= 0x016E195C
           and d['type'] == 'w',
 ' SetStackTop: %(val)s at addr=%(addr)s (@ %(pc)s)',
 'red'),
# Stack contents: 0x800 bytes from 0x016E1164, stopping before the PC slot at 0x016E1964.
(lambda d: (int(d['addr'], 16) - 0x016E1164) % 5152 < 0x800
           and int(d['addr'], 16) >= (0x016E1164)
           and d['type'] == 'r',
 ' GetStack: %(val)s at stack off %(soff)s (addr=%(addr)s @ %(pc)s)',
 'yellow'),
(lambda d: (int(d['addr'], 16) - 0x016E1164) % 5152 < 0x800
           and int(d['addr'], 16) >= (0x016E1164)
           and d['type'] == 'w',
 ' SetStack: %(val)s at stack off %(soff)s (addr=%(addr)s @ %(pc)s)',
 'cyan'),
```
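All of these predicates encode the same layout: one interpreter context every 5152 (0x1420) bytes, with the stack address slot, the 0x800-byte stack, the PC and the stack top at fixed offsets from 0x016E1160. A sketch that decodes an address into (field, context index, stack offset) could look like this. The function and constant names are hypothetical, the first context is assumed to be the lowest one (as the filters' lower bounds imply), and measuring the stack offset from 0x016E1164 is only one guess at what %(soff)s means.

```python
STRIDE = 5152                 # bytes between two interpreter contexts (0x1420)
STACK_ADDR_SLOT = 0x016E1160  # first-context addresses, taken from the filters
STACK_BASE = 0x016E1164
STACK_SIZE = 0x800
PC_SLOT = 0x016E1964
STACK_TOP_SLOT = 0x016E1968


def classify(addr):
    """Return (field, context, stack_offset) for an interpreter-state address, or None."""
    if addr < STACK_ADDR_SLOT:
        return None
    ctx, rel = divmod(addr - STACK_ADDR_SLOT, STRIDE)
    addr0 = STACK_ADDR_SLOT + rel  # same field, translated into the first context
    if addr0 == STACK_ADDR_SLOT:
        return ('stack_addr', ctx, None)
    if STACK_BASE <= addr0 < STACK_BASE + STACK_SIZE:
        return ('stack', ctx, addr0 - STACK_BASE)
    if addr0 == PC_SLOT:
        return ('pc', ctx, None)
    if addr0 == STACK_TOP_SLOT:
        return ('stack_top', ctx, None)
    return None
```

For instance, `classify(0x016E2D84)` gives `('pc', 1, None)`: the PC slot of the second context, which is where the first opcode 11 trace below stores its PC.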

Let's re-run our filtering script on the dump and look for instructions that access the stack! Opcode 11 seems quite small and modifies the stack top, so let's look at it more closely:

ReadInstr: 11000000 at pc=0000000C (@ 80091DBC)

SetPC: 00000010 at addr=016E2D84 (@ 80091DC8)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000001', 'pc':'80092720'

{'type':'r', 'size':4, 'addr':'016E053C', 'val': '816D83B4', 'pc':'80092724'

GetPC: 00000010 at addr=016E2D84 (@ 80092730)

SoAccess: type=r val=00000008 at off=00000030 (@ 80092734)

SetPC: 00000014 at addr=016E2D84 (@ 8009273C)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000001', 'pc':'80092740'

GetStackTop: 000007B8 at addr=016E2D88 (@ 8009274C)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000001', 'pc':'80092764'

GetStackTop: 000007B8 at addr=016E2D88 (@ 80092770)

SetStackTop: 000007B0 at addr=016E2D88 (@ 80092778)

ReadInstr: 11000000 at pc=00000158 (@ 80091DBC)

SetPC: 0000015C at addr=016E1964 (@ 80091DC8)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80092720'

{'type':'r', 'size':4, 'addr':'016E053C', 'val': '816D83B4', 'pc':'80092724'

GetPC: 0000015C at addr=016E1964 (@ 80092730)

SoAccess: type=r val=00000000 at off=0000017C (@ 80092734)

SetPC: 00000160 at addr=016E1964 (@ 8009273C)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80092740'

GetStackTop: 00000794 at addr=016E1968 (@ 8009274C)

{'type':'r', 'size':4, 'addr':'016E0544', 'val': '00000000', 'pc':'80092764'

GetStackTop: 00000794 at addr=016E1968 (@ 80092770)

SetStackTop: 00000794 at addr=016E1968 (@ 80092778)

Opcode 11 seems to read an argument from the word right after the opcode in the bytecode and subtract it from the stack top. For example, 11000000 00000010 removes 0x10 from the stack top; in the first trace above, the argument is 8 and the stack top goes from 0x7B8 to 0x7B0. Most of the time this is done to reserve space for local variables (on x86, for example, you would do sub esp, 0x10), so let's call this instruction RESERVE. Opcode 10 seems to do almost the same thing but adds to the stack top instead of subtracting, so let's call it UNRESERVE.
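Putting together the opcodes identified so far, here is a rough model of one interpreter context. It is a sketch under several assumptions: `code` is indexed directly by PC, words are big-endian (as in the dumps), every modelled opcode except 06 is followed by one argument word, CALL/RET return addresses live in a separate Python list because the exact stack slot op05 uses hasn't been pinned down, and the condition for 09 is passed in by the caller since where it comes from is still unknown. The class name `ToyContext` is hypothetical.

```python
import struct


class ToyContext:
    """Hypothetical model of one interpreter context, limited to known opcodes."""

    def __init__(self, code, pc, stack_top):
        self.code = code            # bytecode, indexed directly by PC (assumption)
        self.pc = pc
        self.stack_top = stack_top
        self.returns = []           # simplification: CALL/RET slots kept aside

    def fetch(self):
        """Read a big-endian word at PC and advance PC by 4."""
        word, = struct.unpack_from('>I', self.code, self.pc)
        self.pc += 4
        return word

    def step(self, cond=False):
        """Execute one instruction and return its opcode byte."""
        op = self.fetch() >> 24
        if op == 0x06:              # RET: back to the instruction after the CALL
            self.pc = self.returns.pop()
            return op
        arg = self.fetch()          # the other modelled opcodes take one argument
        if op == 0x05:              # CALL: remember where to come back to
            self.returns.append(self.pc)
            self.pc = arg
        elif op == 0x08:            # JUMP: unconditional, absolute
            self.pc = arg
        elif op == 0x09:            # CJUMP: condition source still unknown
            if cond:
                self.pc = arg
        elif op == 0x11:            # RESERVE
            self.stack_top -= arg
        elif op == 0x10:            # UNRESERVE
            self.stack_top += arg
        else:
            raise NotImplementedError('opcode %02X not modelled yet' % op)
        return op
```

Replaying the first opcode 11 trace, `ToyContext(bytes(0x0C) + bytes.fromhex('11000000 00000008'), pc=0x0C, stack_top=0x7B8).step()` leaves PC at 0x14 and the stack top at 0x7B0, matching the SetPC and SetStackTop lines of that trace.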

Another opcode, 0E, seems to write values to the stack and subtract 4 from the stack top each time it does so. In other words, it pushes a value onto the stack. But where does this value come from?

In the next part of this article, I'll talk about the scratchpad, where the temporary variables are stored for computations, and how variables are represented in this interpreter. Stay tuned!